Most production-level Pentaho ETL jobs have a complex design, which makes run-time failures more likely. Jobs that are scheduled to run in a predefined time slot may fail due to data, network, or database-related issues.

Monitoring job execution manually means keeping a close watch on the log file, which is tedious and, for complex jobs, error-prone.

The solution to this problem is to send email notifications that report the running status of a job and alert us when a failure occurs. This shortens the response time and lets us rerun the job from the point of failure quickly and with greater accuracy.

Also, a convenient way to share reports is to set up an email server that can send reports to recipients. This feature works with the report scheduling feature to automate the process of emailing reports to your user community. Setting up an email server is not required. If you want to get started quickly or do not have information about your email server, skip this for now. You can always come back to it later.

Consider a complex job. In such a job, it is very difficult to tell whether the job is still running or has failed.

To deal with such a situation, one option is to send an email upon failure or success with the log file attached. This helps clients and vendors identify and analyze the issues encountered during the scheduled run. Below is an example of configuring email notification in Pentaho Data Integration.

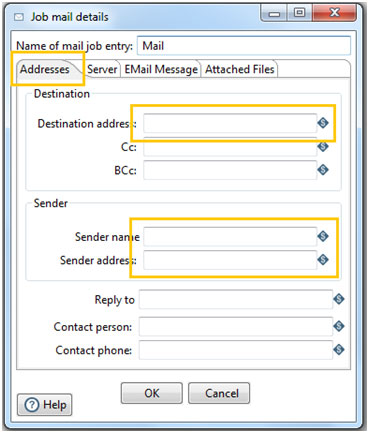

For sending an email notification, we have a very useful Mail component provided by PDI.

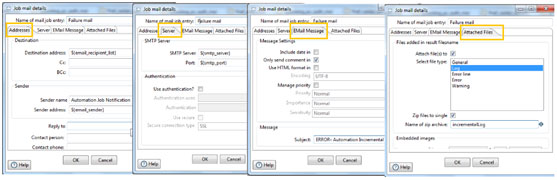

Mail Component

The Mail job entry allows us to send a text or HTML email with optional file attachments. It can be used to report either a job failure or a success. It sends emails to clients and vendors at the end of a successful or unsuccessful load, along with a log file for their reference.

As it needs to send an email, we need to provide SMTP server details.

You can attach files to your email messages such as error logs and regular logs. In addition, logs can be zipped into a single archive for convenience.

The important options here are:

Destination addresses - Email addresses of the recipients who will receive notifications about job execution.

Sender name - Name of the sender as it should appear on the email.

Sender address - Email address from which notifications are sent, typically the address configured for the SMTP server.

Server Tab

SMTP Server - The host name or IP address of the SMTP server.

Port - The port on which the SMTP server is running.

Email Message Tab

Subject - Subject of the email notification.

Comment - Body of the email notification.

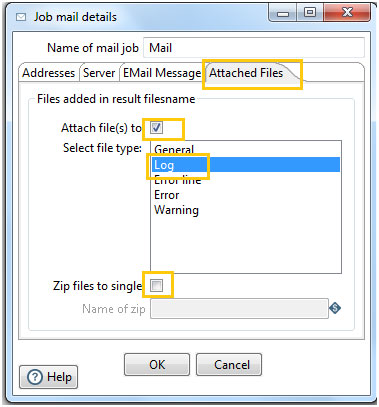
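To make the fields above concrete, here is a minimal Python sketch of the kind of message those settings describe. It is not PDI code and says nothing about how PDI implements the Mail entry. The sender, addresses, subject, server name, and port are hypothetical placeholders, and the message is only built, not sent.

```python
from email.message import EmailMessage


def build_notification(sender_name, sender_address, destinations, subject, comment):
    """Assemble a plain-text notification from the same fields the Mail entry asks for."""
    msg = EmailMessage()
    msg["From"] = f"{sender_name} <{sender_address}>"
    msg["To"] = ", ".join(destinations)
    msg["Subject"] = subject
    msg.set_content(comment)
    return msg


# Hypothetical values, for illustration only.
message = build_notification(
    sender_name="ETL Monitor",
    sender_address="etl@example.com",
    destinations=["client@example.com", "vendor@example.com"],
    subject="Main Automation job failed",
    comment="The scheduled run failed. See the attached log for details.",
)

# Sending would use the Server tab values (SMTP host and port), for example:
# import smtplib
# with smtplib.SMTP("smtp.example.com", 25) as server:
#     server.send_message(message)
```

Keeping message construction separate from sending makes it easy to check the subject and recipients before pointing the code at a real SMTP server.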

Another important tab is Attached Files.

Use it to specify the attachments to send with the email notification.

Attach files to message?

Enable to attach a file to your email message

Select file type

The files to send are defined within the internal files result set. Every file in this list is marked with a file type, and you can select which type of file you want to send:

- General

- Log

- Error line

- Error

- Warning

Zip files to single archive?

Enable to have attachments archived in a zip file

Name of the zip archive

Define the filename of your zip archive

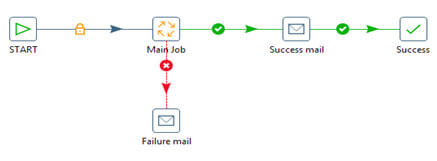
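The effect of the zip option can be pictured with a short Python sketch that bundles several log files into one archive. This only illustrates the idea, not what PDI does internally. The file names `job.log`, `transformation.log`, and `logs.zip` are hypothetical, and the demo writes throwaway files to a temporary directory.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_logs(log_paths, archive_path):
    """Bundle the given log files into one zip archive and return its path."""
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in log_paths:
            # Store each file under its base name so the archive has no directory tree.
            archive.write(path, arcname=Path(path).name)
    return archive_path


# Demo with throwaway files; the names are hypothetical.
work_dir = Path(tempfile.mkdtemp())
logs = []
for name in ("job.log", "transformation.log"):
    log_file = work_dir / name
    log_file.write_text("sample log line\n")
    logs.append(log_file)

archive = zip_logs(logs, work_dir / "logs.zip")
with zipfile.ZipFile(archive) as bundle:
    print(bundle.namelist())
```

A single archive keeps the notification to one attachment, which is convenient when a run produces several log files.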

Here is an example of setting up logging for each job and transformation in PDI, and of configuring failure email notifications at the component level.

Above is the Main Automation job, in which the complete processing is done. We need to log details for the jobs and transformations inside this job, so we create a wrapper job, called the Master Automation job, that contains the success and failure email entries and attaches the log files.

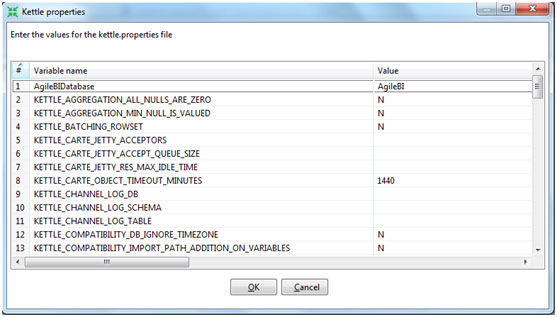

Here are detailed snapshots of the email notification configuration. The details in the configuration are passed dynamically through Kettle properties, which is good practice.

We can open the Kettle properties by pressing CTRL+ALT+P.

Specify the details that need to be passed dynamically to the Master Automation job.

Here, each variable name is the name we referenced as ${variable_name} in the Mail component, listed alongside its value.

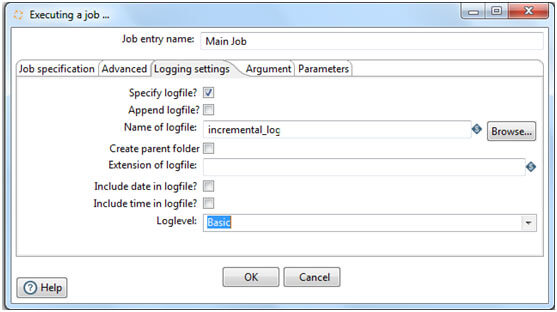
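The idea behind the ${variable_name} placeholders can be sketched in a few lines of Python. This is an illustration of the substitution concept only, not PDI's own resolver. The property names `SMTP_HOST`, `SMTP_PORT`, and `MAIL_TO` are hypothetical, and leaving unknown names unchanged is a choice made for this sketch.

```python
import re

# Hypothetical kettle.properties content; the variable names are illustrative only.
KETTLE_PROPERTIES = """
# mail settings
SMTP_HOST=smtp.example.com
SMTP_PORT=25
MAIL_TO=client@example.com
"""


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def substitute(field, variables):
    """Replace each ${name} with its value; unknown names are left unchanged."""
    return re.sub(
        r"\$\{([^}]+)\}",
        lambda match: variables.get(match.group(1), match.group(0)),
        field,
    )


variables = parse_properties(KETTLE_PROPERTIES)
print(substitute("${SMTP_HOST}:${SMTP_PORT}", variables))  # prints smtp.example.com:25
```

Because the Mail settings only hold placeholders, changing a server or recipient means editing one properties file rather than every job that sends mail.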

To enable job-level and transformation-level logging to a log file, we need to check the Specify logfile? checkbox.

Below are the steps we need to follow to enable job and transformation level logging.

1. Double-click the job whose log details we want written to the log file that is sent to clients and vendors.

Logging Settings tab

By default, if you do not set logging, Pentaho Data Integration will take log entries that are being generated and create a log record inside the job. For example, suppose a job has three transformations to run and you have not set logging. The transformations will not output logging information to other files, locations, or special configurations. In this instance, the job executes and puts logging information into its master job log.

In most instances, it is acceptable for logging information to be available in the job log. For example, if you have load dimensions, you want logs for your load dimension runs to display in the job logs. If there are errors in the transformations, they will be displayed in the job logs. If, however, you want all your log information kept in one place, you must set up logging.

Specify logfile?

We need to check this checkbox to specify a separate logging file for the execution of this job.

Append logfile?

Enable to append to the logfile as opposed to creating a new one.

This appends the logs of the different jobs and transformations enclosed in the Main Automation job to one file instead of creating a new log file for each job and transformation.

Name of logfile

The directory and base name of the log file; for example C:\logs

In our example we have set it to incremental_log.

Extension of logfile

The file name extension, for example log or txt. By default, the extension is .log.

Loglevel

This is the most important dropdown: it determines how much detail is written to the log.

It can be Basic, Detailed, Debug, Row Level, and so on.

Conclusion

Here, we've created an automated way of sending success/failure email notifications to clients and vendors. The mail configuration details are specified in the Kettle properties file, and an email is sent as soon as a job fails, whether because of a job, connection, or database issue, so the issue can be addressed without wasting time.