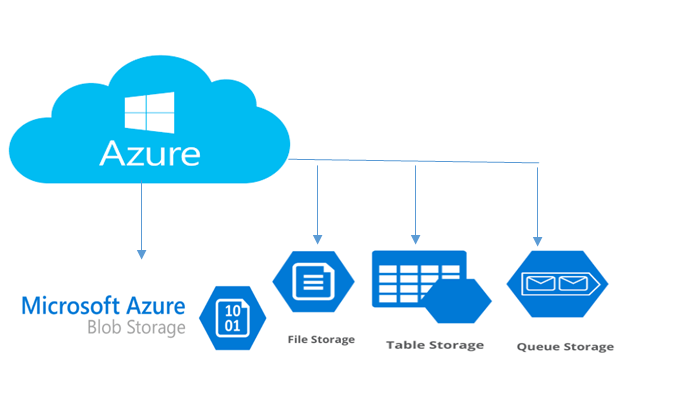

Azure Storage is Microsoft's cloud storage solution for modern data storage scenarios. Azure Storage offers a massively scalable object store for data objects, a file system service for the cloud, a messaging store for reliable messaging, and a NoSQL store.

Features:

- Durable and highly available

Redundancy ensures that your data is safe in the event of transient hardware failures. You can also opt to replicate data across data centres or geographical regions (known as geos) for additional protection from a local catastrophe or natural disaster. Data replicated in this way remains highly available in the event of an unexpected outage.

- Secure

All data written to Azure Storage is encrypted by the service. Azure Storage provides you with fine-grained control over who has access to your data.

- Scalable

Azure Storage is designed to be massively scalable to meet the data storage and performance needs of today's applications.

- Managed

Microsoft Azure handles hardware maintenance, updates, and critical issues for you.

- Accessible

Data in Azure Storage is accessible from anywhere in the world over HTTP or HTTPS. Microsoft provides client libraries for Azure Storage in a variety of languages, including .NET, Java, Node.js, Python, PHP, Ruby, Go, and others, as well as a mature REST API. Azure Storage supports scripting in Azure PowerShell or Azure CLI. The Azure portal and Azure Storage Explorer also offer easy visual solutions for working with your data.

Types

Choosing Blob storage

Strong consistency

When an object is changed, it is verified everywhere for superior data integrity, ensuring you always have access to the latest version.

Object mutability

Get the flexibility to perform edits in place, which can improve your application performance and reduce bandwidth consumption.

Multiple blob types

Block, page and append blobs give you maximum flexibility to optimise your storage to your needs.

Easy-to-use geo-redundancy

Configure geo-replication options from a single menu to improve global and local access and to support business continuity.

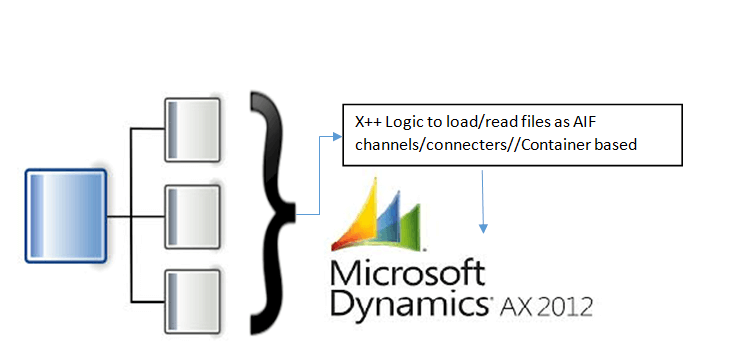

Deprecation of Shared folder/directory based structure

With the advent of Azure and cloud computing, the shared-folder repository, where you drop files for your code to pick up, has been deprecated. Up to AX 2012 this was simple: files were dumped periodically into a shared location, and your AIF or file-downloader code consumed them. The code read each file, loaded its rows one by one into a container-type variable, and then pushed them on for further processing.

A similar architecture was also used for file-based AIF exchanges.

With Azure as the environment, this shared-folder architecture is deprecated. Your code now needs to read the content from an Azure container and then send it to D365 for further execution.

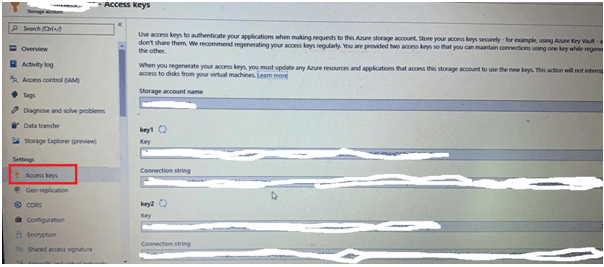

Azure Access Keys

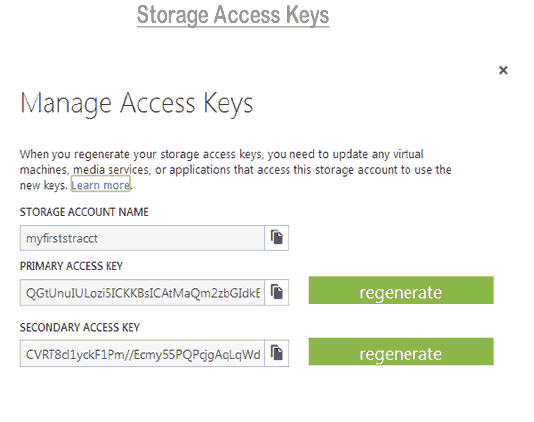

When we create a storage account, Azure generates and assigns two 512-bit storage access keys to the account: a primary access key and a secondary access key. These keys are required for authentication when accessing the storage account. Remember, these keys can be regenerated whenever required. When you regenerate a key, the previous value of that key stops working.

In the Management Portal, click the Manage Access Keys icon located at the bottom of the dashboard screen. The Manage Access Keys dialog, shown below, is displayed. Use this dialog to copy and regenerate the keys.

To access Azure storage services from an application, we need to provide an account name and access key in the connection string. Copy the access key from the ‘Manage Access Keys’ dialog to use it in the connection string.
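As a minimal sketch of what such a connection string looks like, the snippet below assembles one from an account name and access key. The function name `build_connection_string` and the placeholder values are illustrative assumptions, not part of the original post; the `core.windows.net` suffix is the default for the public Azure cloud and may differ in other clouds.

```python
def build_connection_string(account_name: str, account_key: str,
                            endpoint_suffix: str = "core.windows.net") -> str:
    """Assemble an Azure Storage connection string from its parts."""
    if not account_name or not account_key:
        raise ValueError("account name and access key are both required")
    parts = {
        "DefaultEndpointsProtocol": "https",
        "AccountName": account_name,
        "AccountKey": account_key,
        "EndpointSuffix": endpoint_suffix,
    }
    # Connection strings are semicolon-separated key=value pairs.
    return ";".join(f"{name}={value}" for name, value in parts.items())


# Placeholder values only (assumptions); never commit a real key to source control.
conn = build_connection_string("mystorageaccount", "<access-key-from-portal>")
print(conn)
```

In practice the key would normally come from a secure configuration store rather than from a literal in code.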

Azure storage emulator

The Microsoft Azure storage emulator is a tool that emulates the Azure Blob, Queue, and Table services for local development purposes. You can test your application against the storage services locally without creating an Azure subscription or incurring any costs. When you're satisfied with how your application is working in the emulator, switch to using an Azure storage account in the cloud.
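One way to make that switch painless is to keep the choice of connection string in configuration. The sketch below falls back to the emulator shortcut `UseDevelopmentStorage=true` when no cloud connection string is configured. The setting name `STORAGE_CONNECTION_STRING` and the function `pick_connection_string` are hypothetical names chosen for illustration.

```python
# Shortcut connection string understood by the storage emulator.
EMULATOR_CONNECTION_STRING = "UseDevelopmentStorage=true"


def pick_connection_string(settings: dict) -> str:
    """Use the configured cloud connection string, else fall back to the emulator."""
    # STORAGE_CONNECTION_STRING is a hypothetical setting name (an assumption).
    cloud = settings.get("STORAGE_CONNECTION_STRING", "").strip()
    return cloud if cloud else EMULATOR_CONNECTION_STRING


# With nothing configured, local development targets the emulator.
print(pick_connection_string({}))
```

With this arrangement, moving from the emulator to a real storage account is a configuration change rather than a code change.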

Securing the storage account

Regenerating the access keys periodically helps keep a storage account secure. Azure provides two access keys so that services using the storage account are not interrupted while one key is being regenerated. For smooth functioning, the following steps are recommended when regenerating a key.

a. Before regenerating the primary access key, make sure that all applications and services use the secondary access key of the storage account. This is necessary because as soon as a new primary access key is generated, applications can no longer access the storage account with the previous primary key.

b. Click the regenerate button next to the primary access key and click 'Yes' to confirm. A new key will be generated.

c. Update the connection strings in all applications and services with the new access key. Your applications will now be able to use the storage account.

d. To regenerate the secondary access key, click on the regenerate button next to the secondary access key.
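The ordering of steps a to c can be illustrated with a toy model. This is a sketch only: `StorageAccountModel` and `rotate_primary` are hypothetical names, no Azure API is called, and the keys are random hex strings rather than the base64 values Azure issues. It simply checks that every application can still authenticate after each step.

```python
import secrets


class StorageAccountModel:
    """Toy stand-in for a storage account with two access keys (not an Azure API)."""

    def __init__(self):
        # 64 random bytes = 512 bits, rendered as hex for this model.
        self.keys = {"primary": secrets.token_hex(64),
                     "secondary": secrets.token_hex(64)}

    def regenerate(self, which: str) -> str:
        """Replace one key; the old value stops working immediately."""
        self.keys[which] = secrets.token_hex(64)
        return self.keys[which]

    def authenticate(self, key: str) -> bool:
        return key in self.keys.values()


def rotate_primary(account: StorageAccountModel, apps: list) -> bool:
    """Rotate the primary key; return True if all apps stayed connected throughout."""
    def all_connected() -> bool:
        return all(account.authenticate(app["key"]) for app in apps)

    # Step a: move every application to the secondary key first.
    for app in apps:
        app["key"] = account.keys["secondary"]
    connected = all_connected()
    # Step b: regenerate the primary key.
    new_primary = account.regenerate("primary")
    connected = connected and all_connected()
    # Step c: point the applications at the new primary key.
    for app in apps:
        app["key"] = new_primary
    return connected and all_connected()


account = StorageAccountModel()
apps = [{"name": "importer", "key": account.keys["primary"]}]
print(rotate_primary(account, apps))
```

Skipping step a in this model would leave the applications holding the old primary key, which fails authentication as soon as step b runs.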

Easy code to download file

You can easily get the URL of the file and use it in new Browser().navigate(fileUrl).

Example:

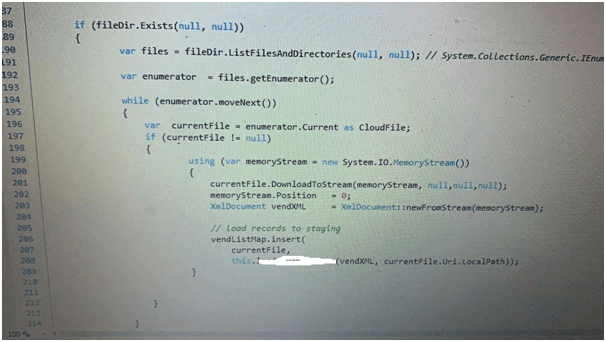

Think of a situation where a periodic job needs to download files from an Azure file location, read the file content, and push it to Dynamics 365 Finance and Operations.

The following code explains the same:

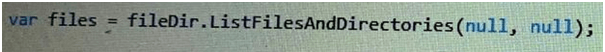

Here fileDir is a variable of type: Microsoft.WindowsAzure.Storage.File.CloudFileDirectory

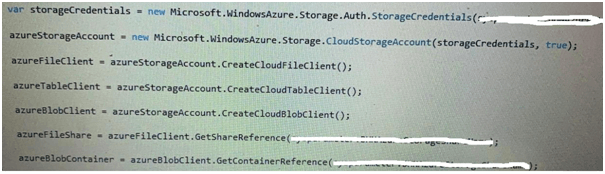

And could be initialized as:

fileDir = FileStorageHelper.getFileDirectory(

Here ‘FileStorageHelper’ is a custom helper class that can be constructed with user IDs, passwords, connection strings, etc.

Next loop through the obtained list of files:

Here, to improve processing speed, we do not insert the file rows directly into AX tables; instead, the rows are stored in a Map for later processing.
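The staging idea can be sketched outside X++ as well. The Python below is an illustration under assumptions, not the code from the screenshots: the file names, the CSV layout, and the function `stage_rows` are hypothetical, and in-memory strings stand in for files downloaded from Azure. Each file's rows are collected in a map keyed by file name, and nothing is written to a table at this stage.

```python
import csv
import io


def stage_rows(files: dict) -> dict:
    """Map each file name to its parsed rows, deferring any database work."""
    staged = {}
    for name, text in files.items():
        reader = csv.reader(io.StringIO(text))
        # Keep non-empty rows only; blank lines parse as empty lists.
        staged[name] = [row for row in reader if row]
    return staged


# Hypothetical file contents standing in for downloaded files.
downloaded = {
    "orders_01.csv": "SO-001,Widget,5\nSO-002,Gadget,2\n",
    "orders_02.csv": "SO-003,Widget,1\n\n",
}
staged = stage_rows(downloaded)
for name, rows in staged.items():
    print(name, len(rows))
```

A later step can then walk the map and insert the rows in bulk, which keeps the download loop free of database round trips.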

Logic app

Suppose that you have a tool that gets updated on an Azure website, and that update acts as the trigger for your logic app. When this event happens, your logic app can update a file in your blob storage container; that update is an action in your logic app.

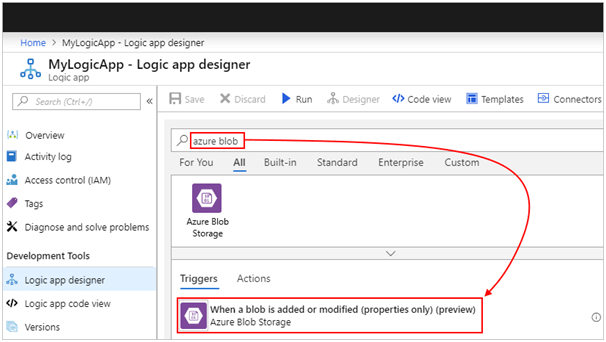

Add blob storage trigger

In Azure Logic Apps, every logic app must start with a trigger, which fires when a specific event happens or when a specific condition is met. Each time the trigger fires, the Logic Apps engine creates a logic app instance and starts running your app's workflow.

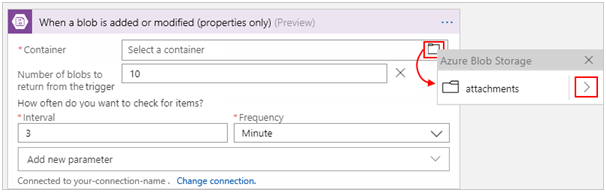

This example shows how you can start a logic app workflow with the When a blob is added or modified (properties only) trigger, which fires when a blob's properties are added or updated in your storage container.

- In the Azure portal or Visual Studio, create a blank logic app, which opens Logic App Designer. This example uses the Azure portal.

- In the search box, enter "azure blob" as your filter. From the triggers list, select the trigger you want.

Next, check out the following sequence of actions:

- In the Container box, select the folder icon.

- In the folder list, choose the right-angle bracket ( > ), and then browse until you find and select the folder you want.

Select the interval and frequency for how often you want the trigger to check the folder for changes. Click to save the changes.
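Conceptually, a polling trigger of this kind compares what it sees on each check with what it saw on the previous one. The sketch below is a simplified model of that idea and not how Logic Apps is implemented: the function `detect_changes`, the blob names, and the use of ETag strings as change markers are all assumptions made for illustration.

```python
def detect_changes(previous: dict, current: dict) -> list:
    """Return names of blobs that are new or whose ETag changed since the last poll."""
    return sorted(
        name for name, etag in current.items()
        if previous.get(name) != etag
    )


# Hypothetical snapshots of a container taken at two consecutive polls.
last_poll = {"invoice.csv": "etag-1", "orders.csv": "etag-7"}
this_poll = {"invoice.csv": "etag-2", "orders.csv": "etag-7", "new.csv": "etag-1"}

print(detect_changes(last_poll, this_poll))  # prints ['invoice.csv', 'new.csv']
```

A shorter interval means changes are noticed sooner, at the cost of more frequent checks against the container.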

How does D365 fit here?

You can select ‘Add an action’ >> ‘Get blob content’ and then use D365 entities to read the file content and load it into D365. This is a good and fast way to integrate.
